
Building a sentiment classification model

Did the movie get a positive or negative review?

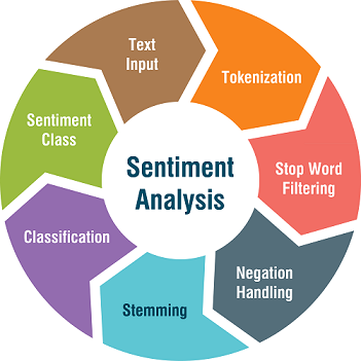

Sentiment classification uses natural language processing and machine learning to interpret the emotion expressed in input text. It is a text analysis technique that detects polarity, that is, whether a piece of text is positive or negative.

This article explains the steps for building a sentiment classification model using the “IMDB dataset of 50k movie reviews”.

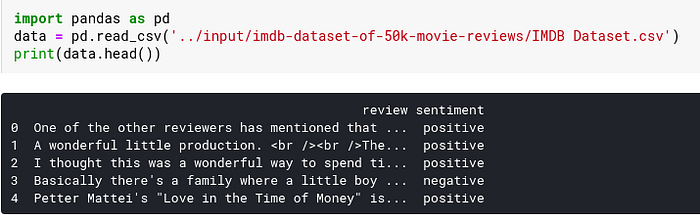

Understanding the dataset

We load the data from a CSV file into a data frame and note the data type of each column.
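As a rough illustration of this step, here is a minimal sketch using pandas. It reads a tiny in-memory sample rather than the real file so that it runs on its own. The two sample rows are made up, and the column names `review` and `sentiment` are assumptions about the file layout, so check them against your copy of the dataset.

```python
import io

import pandas as pd

# Hypothetical two-row sample standing in for the real CSV file.
# The column names "review" and "sentiment" are assumptions.
sample_csv = io.StringIO(
    "review,sentiment\n"
    '"A wonderful, moving film.",positive\n'
    '"Dull plot and wooden acting.",negative\n'
)

# With the real dataset, pass the path to the CSV file instead of sample_csv.
df = pd.read_csv(sample_csv)

# Inspect the first rows, the data type of each column, and the label balance.
print(df.head())
print(df.dtypes)
print(df["sentiment"].value_counts())
```

Checking `dtypes` and the label counts early is a cheap way to confirm that both columns were read as text and that the two classes are present before any preprocessing starts.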

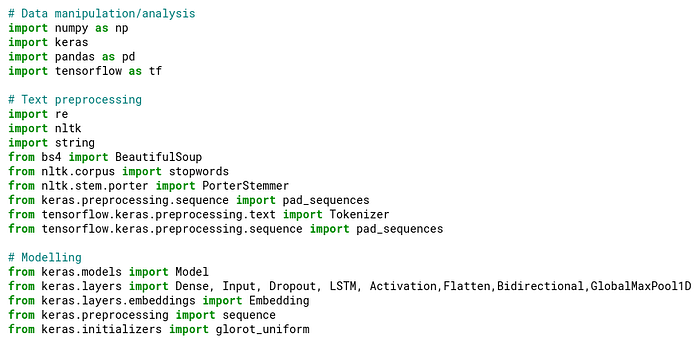

Importing libraries and packages

We import the libraries and packages needed for text preprocessing and for building the model.

Text preprocessing

Text preprocessing refers to the steps taken to convert text from human language into a machine-readable format for further analysis. The better your text is preprocessed, the more accurate the…